Introduction to the Hardware Setup for AI Training

Training large AI models such as Large Language Models (LLMs) and diffusion models requires powerful hardware, especially when training takes place locally and offline rather than in the cloud. These models demand substantial compute and memory to train efficiently. In this article, we present three possible configurations, ranging from budget-friendly to extremely expensive, to help you find the right hardware for your needs, whether you are on a tight budget or looking for a high-end setup.

Budget Configuration

For beginners or those on a limited budget, there are hardware options that, while not top of the line, can still train small to mid-sized models such as smaller LLMs or diffusion models.

- Processor (CPU): AMD Ryzen 9 5950X – A powerful 16-core, 32-thread processor that performs well under parallel tasks and heavy workloads.

- Graphics Card (GPU): NVIDIA RTX 3060 – This graphics card offers 12 GB of VRAM and is an affordable choice for training smaller models.

- Memory (RAM): 64 GB DDR4 – A solid minimum to load large datasets and keep the system stable at the same time.

- Storage (SSD): 2 TB NVMe SSD – Fast storage is crucial for efficiently reading training data and writing model checkpoints, which grow large as models get bigger.

- Power Supply: 750W Power Supply – A sufficiently strong power supply is necessary to run the hardware stably.

This configuration offers a good price-performance ratio and can handle smaller AI models, such as the smaller GPT-2 variants, or small diffusion models. For users just starting out with AI training, it is a viable and affordable option.
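To get a feel for why 12 GB of VRAM limits you to smaller models, a common rule of thumb for full training with mixed precision and the Adam optimizer is about 16 bytes per parameter: 2 bytes each for fp16 weights and gradients, plus 12 bytes for fp32 master weights and Adam's two moment estimates. The Python sketch below applies that rule; the constant and function names are hypothetical, and the estimate deliberately ignores activations, which grow with batch size and sequence length and often add a large amount on top.

```python
# Rough VRAM estimate for full training with mixed precision + Adam.
# Assumption (rule of thumb): ~16 bytes per parameter =
#   fp16 weights (2 B) + fp16 gradients (2 B)
#   + fp32 master weights (4 B) + Adam first/second moments (4 B + 4 B).
# Activations, framework overhead and fragmentation are NOT included.

BYTES_PER_PARAM_MIXED_ADAM = 16  # hypothetical rule-of-thumb constant
GIB = 1024 ** 3


def training_state_gib(num_params: int,
                       bytes_per_param: int = BYTES_PER_PARAM_MIXED_ADAM) -> float:
    """Memory in GiB for weights, gradients and optimizer state."""
    return num_params * bytes_per_param / GIB


def fits_in_vram(num_params: int, vram_gib: float) -> bool:
    """True if the training state alone fits, leaving activations aside."""
    return training_state_gib(num_params) < vram_gib


if __name__ == "__main__":
    rtx_3060_gib = 12
    models = [
        ("GPT-2 small", 124_000_000),  # smallest GPT-2 variant, ~124M params
        ("GPT-2 XL", 1_500_000_000),   # largest GPT-2 variant, ~1.5B params
    ]
    for name, n in models:
        need = training_state_gib(n)
        print(f"{name}: ~{need:.1f} GiB of training state, "
              f"fits in 12 GB: {fits_in_vram(n, rtx_3060_gib)}")
```

With these figures, GPT-2 small needs under 2 GiB for its training state, while the 1.5-billion-parameter GPT-2 XL needs over 22 GiB and would not fit on a 12 GB card without extra memory-saving techniques.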

Expensive Configuration

Those who need more power for larger LLMs or detailed diffusion models should invest in more powerful hardware. This configuration allows you to train more demanding models without relying on cloud services, although models on the scale of GPT-3 (175 billion parameters) remain far beyond any single consumer GPU.

- Processor (CPU): Intel Core i9-13900K – A high-end processor with 24 cores (8 performance and 16 efficiency cores, 32 threads), well suited to workloads that benefit from strong parallelization.

- Graphics Card (GPU): NVIDIA RTX 4090 – With 24 GB of VRAM, this GPU can handle many training workloads and significantly accelerates training.

- Memory (RAM): 128 GB DDR5 – Enough RAM to keep large models and datasets in memory, speeding up training.

- Storage (SSD): 4 TB NVMe SSD – Large models and training data require a lot of storage space, and a fast NVMe SSD keeps load times short.

- Power Supply: 1000W Power Supply – The power consumption of this hardware requires a particularly strong power supply.

This configuration offers enough power to efficiently train medium to large models offline. Users who regularly work with large datasets and complex models will find a balanced setup here.
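Power supply sizing is mostly addition: sum the rated draw of the major components and check how much of the PSU's capacity that uses. The sketch below does this for the expensive configuration, using NVIDIA's 450 W total graphics power rating for the RTX 4090 and Intel's 253 W maximum turbo power for the Core i9-13900K; the 100 W for the rest of the system is an assumed allowance, and the function names are hypothetical.

```python
from __future__ import annotations

# Rough power-budget check for a PSU.
# GPU/CPU figures are manufacturer ratings (RTX 4090 total graphics power
# 450 W, Core i9-13900K maximum turbo power 253 W). The 100 W for the rest
# of the system (board, RAM, SSD, fans) is an ASSUMPTION, not a measurement.


def estimated_peak_watts(component_watts: dict[str, float]) -> float:
    """Sum the rated power draw of all listed components."""
    return sum(component_watts.values())


def psu_load_fraction(component_watts: dict[str, float], psu_watts: float) -> float:
    """Fraction of the PSU rating used at the estimated peak draw."""
    if psu_watts <= 0:
        raise ValueError("psu_watts must be positive")
    return estimated_peak_watts(component_watts) / psu_watts


if __name__ == "__main__":
    expensive_build = {
        "RTX 4090 (total graphics power)": 450,
        "Core i9-13900K (max turbo power)": 253,
        "rest of system (assumed)": 100,
    }
    peak = estimated_peak_watts(expensive_build)
    load = psu_load_fraction(expensive_build, psu_watts=1000)
    print(f"Estimated peak: {peak:.0f} W, {load:.0%} of a 1000 W PSU")
```

With these numbers, the estimated peak is about 803 W, roughly 80% of a 1000 W unit, which leaves some headroom for short load spikes. Real draw varies with workload and power limits, so treat this as a sanity check rather than a measurement.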

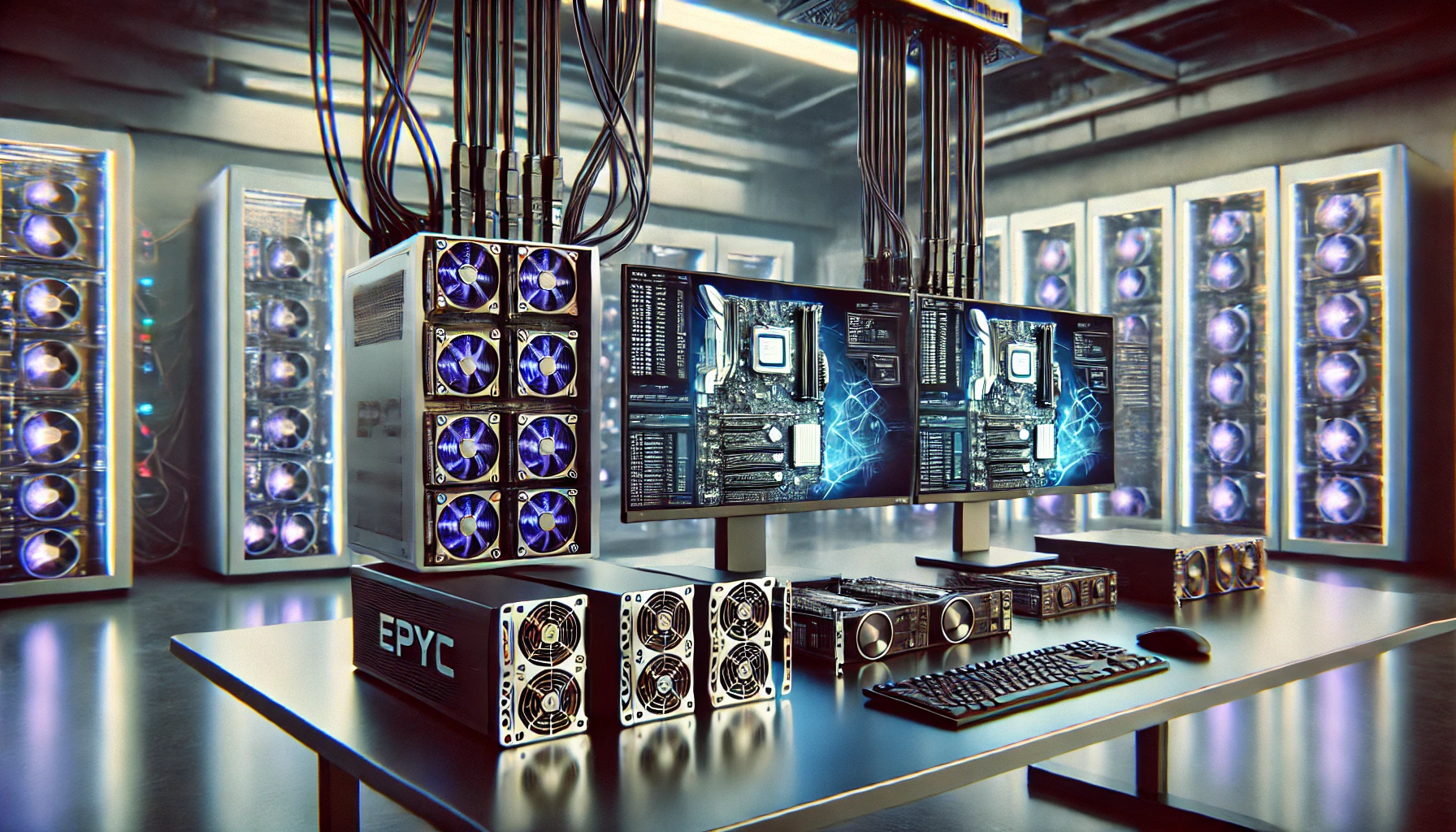

Extremely Expensive Configuration

For those who want top-tier hardware and work with very large LLMs or diffusion models, training requires equipment at an extremely high level. This configuration is aimed at professional research and enterprise applications.

- Processor (CPU): AMD EPYC 7742 – A server-grade processor with 64 cores and simultaneous multithreading (128 threads) that handles even the most intense computing loads.

- Graphics Card (GPU): NVIDIA A100 Tensor Core – This professional GPU, with 80 GB HBM2e memory, is optimized specifically for training AI models.

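- Memory (RAM): 1 TB DDR4 – The EPYC 7742 platform uses eight-channel DDR4, and a large pool of system memory helps with data preprocessing, caching datasets, and offloading optimizer state from the GPU when training large models.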

- Storage (SSD): 8 TB NVMe SSD – Large datasets and models require plenty of space and fast access. An NVMe SSD of this size and speed is indispensable here.

- Power Supply: 2000W Power Supply – The power consumption in this configuration is massive and requires an appropriate power supply.

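This configuration is for institutions or companies that want to train very large and complex models, including massive diffusion models, locally. Models on the scale of GPT-4, however, are far beyond any single workstation. Of the three setups, this one offers the most performance, and the server platform leaves room to scale, since the EPYC 7742 provides 128 PCIe 4.0 lanes for additional GPUs.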
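Storage needs grow quickly once you save resumable checkpoints. As a rough sketch, assume a checkpoint holds fp32 weights plus Adam's two moment estimates, about 12 bytes per parameter; the helper names, the 12-byte figure, and the 7-billion-parameter example model are assumptions for illustration, and actual checkpoint sizes depend on the framework and on what you choose to save.

```python
# Rough estimate of how many full training checkpoints fit on a drive.
# ASSUMPTION: a resumable checkpoint stores fp32 weights (4 B) plus Adam's
# first and second moments (4 B + 4 B), i.e. ~12 bytes per parameter.

BYTES_PER_PARAM_CHECKPOINT = 12  # hypothetical rule-of-thumb constant


def checkpoint_bytes(num_params: int,
                     bytes_per_param: int = BYTES_PER_PARAM_CHECKPOINT) -> int:
    """Approximate size of one checkpoint in bytes."""
    return num_params * bytes_per_param


def checkpoints_that_fit(num_params: int, disk_tb: float,
                         dataset_tb: float = 0.0) -> int:
    """Whole checkpoints that fit after reserving space for datasets.

    Capacities are decimal terabytes (1 TB = 10**12 bytes), as SSDs are labeled.
    """
    free_bytes = (disk_tb - dataset_tb) * 10**12
    if free_bytes <= 0:
        return 0
    return int(free_bytes // checkpoint_bytes(num_params))


if __name__ == "__main__":
    n = 7_000_000_000  # hypothetical 7B-parameter model
    size_gb = checkpoint_bytes(n) / 10**9
    count = checkpoints_that_fit(n, disk_tb=8, dataset_tb=2)
    print(f"~{size_gb:.0f} GB per checkpoint, {count} fit on 8 TB "
          f"with 2 TB reserved for data")
```

Under these assumptions, each checkpoint is about 84 GB, so the 8 TB drive holds around 71 of them once 2 TB are set aside for datasets.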

Training large AI models like LLMs or diffusion models requires well-thought-out hardware setups. While a budget setup is sufficient for beginners, professionals and companies will likely want more powerful configurations. These three variants, from budget to extremely expensive, show the range of options available for local, offline AI model training.